[Video] Putting the AI in R&D — with Badhri Srinivasan, Tony Wood, Rosana Kapeller, Hugo Ceulemans, Saurabh Saha and Shoibal Datta

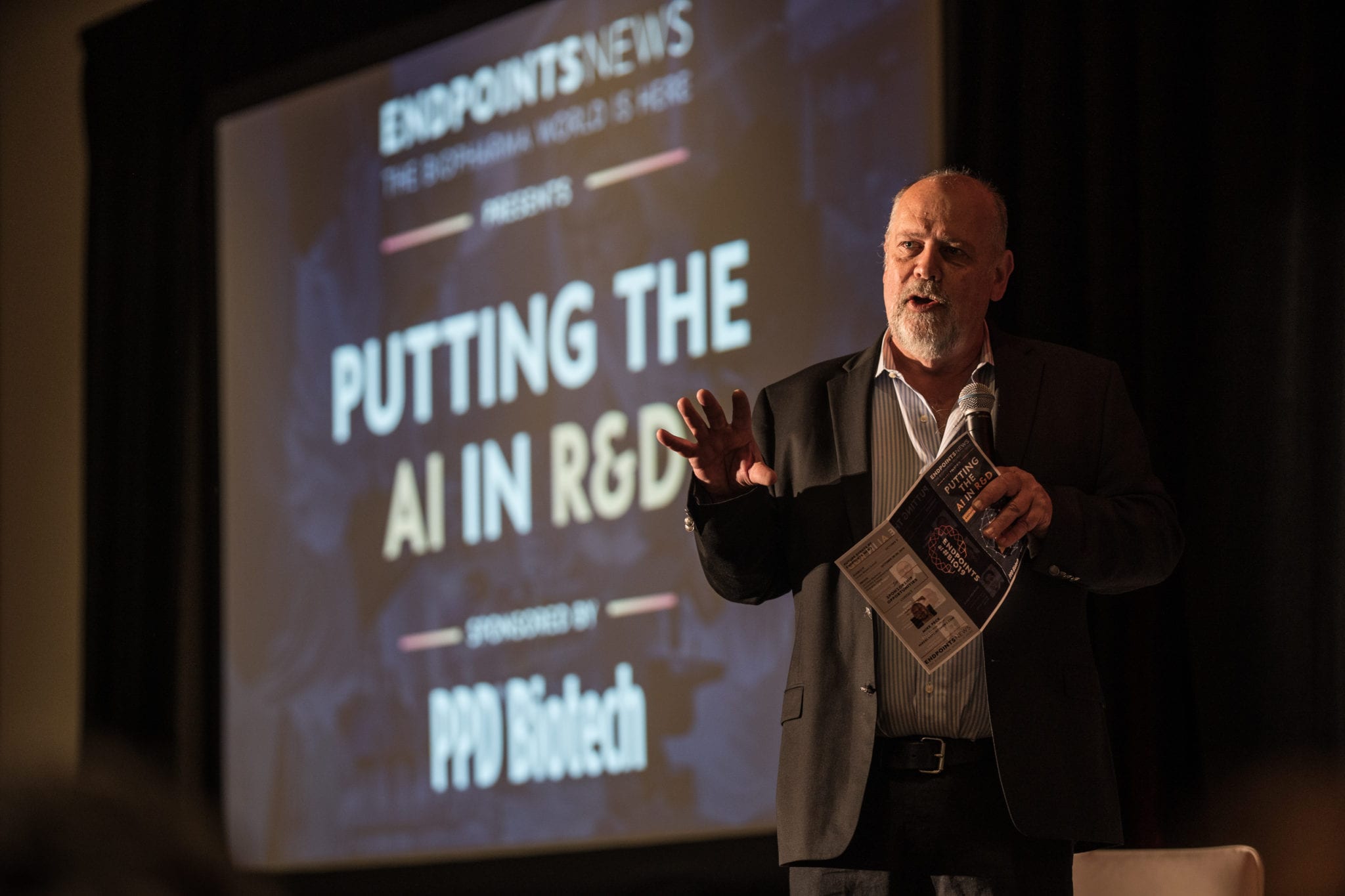

During BIO this year, I had a chance to moderate a panel of some of the top tech experts in biopharma on their real-world use of artificial intelligence in R&D. A lot has been said about the potential of AI, but I wanted to explore what some of the larger players are actually doing with this technology today, and how they see it advancing in the future. It was a fascinating exchange, which you can see here. The transcript has been edited for brevity and clarity. — John Carroll
